Here is my test app:

#include <Wire.h>

unsigned long lastms; // millis() returns unsigned long

volatile int x = 0;

volatile int y = 0;

#define STM

void receiveEvent(int howMany) {

x = Wire.read();

}

void requestEvent() {

y = 1;

}

void setup() {

Serial.begin(115200);

#ifdef STM

//STM32F103

pinMode(PB6, INPUT); //PB6=SCL

pinMode(PB7, INPUT); //PB7=SDA

Wire.setSCL(PB6);

Wire.setSDA(PB7);

#else

//Arduino Nano

pinMode(A4, INPUT);

pinMode(A5, INPUT);

//Wire.setSDA(A4);

//Wire.setSCL(A5);

#endif

Wire.begin(11); // join the I2C bus as a slave at address 11

//Wire.onRequest(requestEvent);

Wire.onReceive(receiveEvent);

lastms = millis();

}

void loop() {

if (millis() - lastms > 1000) { // subtraction stays correct when millis() wraps

Serial.print("-");

lastms = millis();

}

if (x != 0) {

Serial.print("U:0x");

Serial.println(x, HEX);

x = 0;

}

if (y != 0) {

Serial.println("V");

y = 0;

}

}

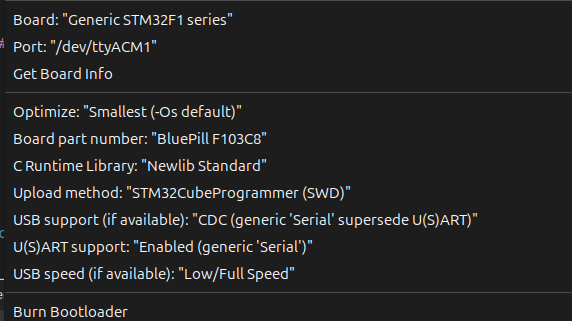

When compiled for the Nano, the application works and displays the incoming I2C data.

When compiled for the STM32F103 with CDC serial, it does not: it only prints "-" once per second.

The incoming data is decoded by an oscilloscope at the same time, so the pull-ups etc. are fine.

On incoming I2C data, PB6 (SCL) is pulled low and held low by the controller, effectively killing all further traffic.

This happens even if USB support is set to NONE.
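Since loop() keeps running (the "-" still appears every second), the stuck SCL line described above could also be logged from the sketch itself instead of only on the oscilloscope. The following is a minimal sketch of that idea, not a tested diagnostic: `StuckLowDetector` and its members are hypothetical names, the 100 ms threshold is arbitrary, and the usage shown in the comment assumes that `digitalRead(PB6)` still reflects the bus level after `Wire.begin()` has taken over the pin, which is not verified here.

```cpp
#include <stdint.h>

// Hypothetical helper: reports when a line has stayed low for at least
// thresholdMs without being seen high in between. It is pure logic, so the
// caller supplies the sampled level and the current time in milliseconds.
struct StuckLowDetector {
  uint32_t thresholdMs;
  uint32_t lowSinceMs;
  bool wasLow;
  bool reported;

  explicit StuckLowDetector(uint32_t threshold)
      : thresholdMs(threshold), lowSinceMs(0), wasLow(false), reported(false) {}

  // Returns true exactly once per stuck period.
  bool update(bool levelHigh, uint32_t nowMs) {
    if (levelHigh) {  // line released: reset the detector
      wasLow = false;
      reported = false;
      return false;
    }
    if (!wasLow) {  // first low sample: start timing
      wasLow = true;
      lowSinceMs = nowMs;
    }
    // Unsigned subtraction stays correct when the millisecond counter wraps.
    if (!reported && (uint32_t)(nowMs - lowSinceMs) >= thresholdMs) {
      reported = true;
      return true;
    }
    return false;
  }
};

// Possible use in the sketch (assumed, untested on hardware):
//   StuckLowDetector sclStuck(100);
//   void loop() {
//     if (sclStuck.update(digitalRead(PB6) == HIGH, millis())) {
//       Serial.println("SCL stuck low");
//     }
//   }
```

Because the line is only sampled when loop() comes around, short high pulses between samples can be missed, so this only indicates a long hold and says nothing about which device is holding the line.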