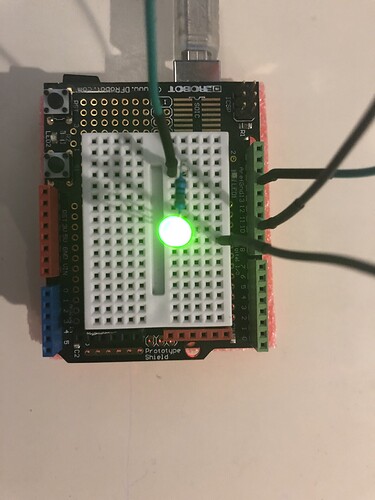

Hello, I am a beginner Arduino user. I recently picked up the hobby, and this is my first forum post. I have a beginner kit by DFROBOT called "Beginner Kit for Arduino," which includes an Arduino Uno. I am currently on the 4th project of this kit, which fades an LED on and off (picture of the circuit attached), and I found something interesting. The following code takes one second (1000 ms) to fade the LED on and another second to fade it off, and it keeps looping, which is expected:

int ledPin = 10;

void setup() {
  pinMode(ledPin, OUTPUT);
}

void loop() {
  fadeOn(1000, 5);
  fadeOff(1000, 5);
}

void fadeOn(unsigned int time, int increment) {
  for (unsigned char value = 0; value < 255; value += increment) {
    analogWrite(ledPin, value);
    delay(time / (255 / increment));
  }
}

void fadeOff(unsigned int time, int decrement) {
  for (unsigned char value = 255; value > 0; value -= decrement) {
    analogWrite(ledPin, value);
    delay(time / (255 / decrement));
  }
}

// You will see the LED getting brighter and fading constantly after uploading the code.

Me being me, I decided to mess around with the increment/decrement values, which is when I noticed something strange with the speed of the fade in and out. Looking at the code, no matter what the increment/decrement value is, each fade in and fade out should take one second, right?

I worked out the factors of 255, tried several of them as the increment/decrement value, and timed how long the Arduino took to complete 10 cycles of fading on and then off. Going strictly by the code, 10 cycles should take 20 seconds no matter what the increment/decrement value is, because each fade on is given 1000 ms and each fade off is given 1000 ms as well. Repeating a 2-second on/off cycle ten times should add up to 20 seconds.

After a couple of trials, I found that when the increment/decrement value is a factor of 255 greater than or equal to 5, 10 fade cycles take the expected 20 seconds. However, when I used 1 as the increment/decrement value, the 10 fade cycles took approximately 15 seconds, and that makes no sense! If you look at the following code and do the math, it should still take 20 seconds for 10 fade cycles, yet it takes 15:

int ledPin = 10;

void setup() {
  pinMode(ledPin, OUTPUT);
}

void loop() {
  fadeOn(1000, 1);
  fadeOff(1000, 1);
}

void fadeOn(unsigned int time, int increment) {
  for (unsigned char value = 0; value < 255; value += increment) {
    analogWrite(ledPin, value);
    delay(time / (255 / increment));
  }
}

void fadeOff(unsigned int time, int decrement) {
  for (unsigned char value = 255; value > 0; value -= decrement) {
    analogWrite(ledPin, value);
    delay(time / (255 / decrement));
  }
}

// You will see the LED getting brighter and fading constantly after uploading the code.
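One way to double-check the math is to add up the delays the loops actually request. Below is a minimal sketch in plain C++, meant to run on a PC rather than on the Uno. It assumes the expression `time/(255/increment)` is evaluated with integer division, as C++ does for integer operands, and it ignores whatever time `analogWrite` and the loop itself take. The names `requestedFadeOnMs`, `requestedFadeOffMs`, and `requestedCyclesMs` are made up for this illustration, and it only accepts factors of 255, matching the values used in the experiment.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper: adds up the delay() arguments that fadeOn() in the
// sketch would pass, using the same integer expressions. PC-only model.
unsigned long requestedFadeOnMs(unsigned int time, int increment) {
    // Only factors of 255 are accepted: with other steps the 8-bit counter
    // can step past 255 and wrap around.
    assert(increment > 0 && increment <= 255 && 255 % increment == 0);
    unsigned long total = 0;
    for (uint8_t value = 0; value < 255;
         value = static_cast<uint8_t>(value + increment)) {
        total += time / (255 / increment);  // integer division, remainder dropped
    }
    return total;
}

// Same idea for fadeOff(): counts down from 255 and sums the requested delays.
unsigned long requestedFadeOffMs(unsigned int time, int decrement) {
    assert(decrement > 0 && decrement <= 255 && 255 % decrement == 0);
    unsigned long total = 0;
    for (uint8_t value = 255; value > 0;
         value = static_cast<uint8_t>(value - decrement)) {
        total += time / (255 / decrement);  // integer division, remainder dropped
    }
    return total;
}

// Total requested delay, in milliseconds, for a number of on/off cycles.
unsigned long requestedCyclesMs(unsigned int time, int step, unsigned int cycles) {
    return cycles * (requestedFadeOnMs(time, step) + requestedFadeOffMs(time, step));
}
```

Calling `requestedCyclesMs(1000, 1, 10)` and `requestedCyclesMs(1000, 5, 10)` and comparing the results with the stopwatch times would show whether the arithmetic alone accounts for the gap.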

To test this further, I made a separate sketch that uses digitalWrite instead of analogWrite to see whether analogWrite was causing the problem. The following code once again takes 15 seconds for what should have taken 20, so the problem doesn't seem to be analogWrite but something about the delay calls inside the for loop:

int ledPin = 10;

void setup() {
  pinMode(ledPin, OUTPUT);
}

void loop() {
  digitalWrite(ledPin, 1);
  fadeOff(1000, 1); // This is supposed to be just a 1 second delay; I commented out the analogWrite call in the function definition.
  digitalWrite(ledPin, 0);
  fadeOn(1000, 1); // This is supposed to be just a 1 second delay; I commented out the analogWrite call in the function definition.
}

void fadeOn(unsigned int time, int increment) {
  for (unsigned char value = 0; value < 255; value += increment) {
    //analogWrite(ledPin, value);
    delay(time / (255 / increment));
  }
}

void fadeOff(unsigned int time, int decrement) {
  for (unsigned char value = 255; value > 0; value -= decrement) {
    //analogWrite(ledPin, value);
    delay(time / (255 / decrement));
  }
}

// Even with digitalWrite, it still takes 15 seconds to complete 10 on and off cycles.
// Theoretically, it should have taken 20 seconds, not 15.

Thanks for bearing with me! Could anyone please tell me what's going on? The math checks out, so why do 10 fade cycles with an increment/decrement value of 1 take 15 seconds instead of 20? My hypothesis is that the Arduino simply can't keep up with 255 loop iterations in one second, especially with a delay call in each of them. But as I mentioned, I'm just a beginner, so I can't draw any educated conclusions. It would be great if someone with more experience could figure this out, because frankly, I'm quite curious!

(FYI: I use the create.arduino.cc online IDE to program my Arduino Uno.)