Hey guys,

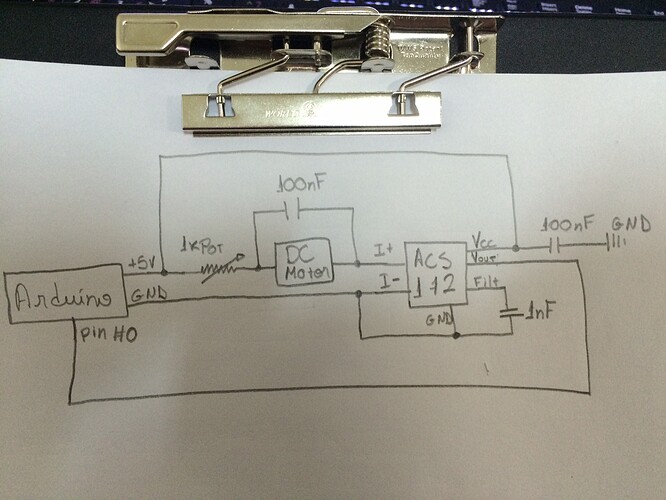

I'm using the attached schematic to start testing the ACS712 (5 A version). Basically, I'm just varying the potentiometer to check whether Vout tracks the current according to the sensor's sensitivity (185 mV/A). That part seems fine: when I vary the current supplied to the load, Vout responds accordingly. However, when I measure Vout with a multimeter, I get a different value from what the serial monitor reports using the code below (e.g. for I = 0 A, I get Vout = 2.5 V in the serial monitor but 2.25 V on the multimeter).

int adcZero = 511;

int adcRaw;

float outputVoltage, dcCurrent; //float, so the fractional volts/amps aren't truncated

//...

adcRaw = analogRead(0);

outputVoltage = adcRaw*(0.0048828125); //In Volts, considering a 10-bit adc

dcCurrent = (adcRaw-adcZero)*0.0264; //In Amps, considering a 185mV/A sensitivity

Serial.print(outputVoltage);

//...
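
To make the arithmetic explicit, here is a minimal standalone sketch of the conversion I'm doing, written as plain C++ without the Arduino headers. The names `adcToVolts` and `adcToAmps` and the constants are just for illustration, and it assumes the ADC reference is exactly 5.0 V, which I haven't actually verified.

```cpp
// Assumptions (not verified on my board): 10-bit ADC, reference exactly 5.0 V,
// ACS712 5 A version with 185 mV/A sensitivity.
constexpr float kVref = 5.0f;           // assumed ADC reference, in volts
constexpr float kAdcSteps = 1024.0f;    // 10-bit ADC
constexpr float kSensitivity = 0.185f;  // sensor sensitivity, in volts per amp
constexpr int kAdcZero = 511;           // ADC count I treat as 0 A

// Convert a raw ADC count to the voltage at the analog pin.
constexpr float adcToVolts(int adcRaw) {
    return adcRaw * (kVref / kAdcSteps);  // 5.0 / 1024 = 0.0048828125 V per count
}

// Convert a raw ADC count to current, relative to the zero-current count.
constexpr float adcToAmps(int adcRaw) {
    return (adcRaw - kAdcZero) * (kVref / kAdcSteps) / kSensitivity;  // about 0.0264 A per count
}
```

With these numbers, `adcToVolts(511)` comes out at about 2.495 V, and the 2.25 V I see on the multimeter would correspond to a count of about 461 under the same 5.0 V assumption.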

Should I just ignore this? According to the datasheet, at 0 A I should get 2.5 V at Vout, and this is what I get in the serial monitor.

PS: My multimeter is fine.

Thanks guys!